I expected a little more from J. J. Abrams’s talk at TED.com. My first disappointment was in realizing that the presentation is almost a year old: he gave it in March 2007, and waiting till now to air it smacks of a publicity push for Cloverfield, the new monster movie produced by Abrams and directed by Matt Reeves, set for release one week from now (or as teaser images like the one above would have it — striving for 9/11-like gravitas — 1.18.08).

The second disappointment came from the disconnect between the content of the talk and the mental picture I’d formed based on the blurb:

> There’s a moment in J.J. Abrams’ amazing new TEDTalk, on the mysteries of life and the mysteries of storytelling, where he makes a great point: Filmmaking as an art has become much more democratic in the past 10 years. Technology is letting more and more people tell their own stories, share their own mysteries. Abrams shows some examples of high-quality films made on home computers, and shares his love of the small, emotional moments inside even the biggest blockbusters.

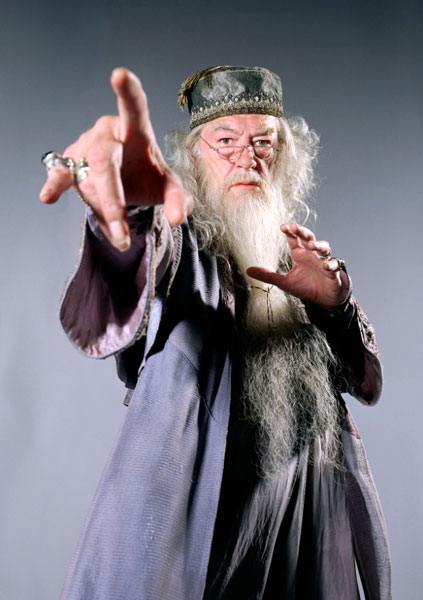

Somehow I took these innocuous words as the promise of some major revelation from Abrams, a writer-producer-director-showrunner on whose bandwagon I’ve been all too happy to hop. Alias was a great show for its first couple of seasons, Lost continues to be blissful mind candy, and I quite liked Mission Impossible III (though I seem to be one of the few who did). My reservations about Abrams’s Star Trek reboot aside, I’ll follow the man anywhere at this point. But I found his talk a frustrating ramble, full of half-told jokes and half-completed insights, shifting more or less randomly from his childhood love of magic tricks to the power of special effects to “do anything.” Along the way he shows a few movie clips and makes a lot of people laugh and applaud, essentially charming his way through a loosely organized scramble of ideas that feel pulled from his back pocket.

More fool me for projecting so helplessly my own hunger for insider knowledge. What I wanted, I now realize, was stories about Cloverfield. Like many genre fans, I’m endlessly intrigued by the film, about which little is known except that little is known about it. The basic outline is clear enough: giant monster attacks New York City. What distinguishes Cloverfield from classic kaiju eiga like Toho’s Godzilla films — and this is what’s got interested parties both excited and dismayed — is the storytelling conceit: consisting entirely of “found footage,” Cloverfield shows the attack from ground level, in jumpy snatches of handheld shots supposedly retrieved from consumer video cameras and cell phones. Like The Blair Witch Project, which attempted to breathe new life into the horror genre by stripping it of its tried-and-true (and trite) conventions of narrative and cinematography, Cloverfield, for those who accept its experimental approach, may pack an exhilarating punch.

For those who don’t, however, the film will stand as merely the latest reiteration of the Emperor’s New Clothes, another media “product” failing to live up to its hype. And that’s what is ultimately so interesting about Abrams’s talk at TED: it embodies the very effect that Abrams is so good at injecting into the stories he oversees. In the manner of M. Night Shyamalan, who struggles ever more unconvincingly with each new film to brand himself a master of the twist surprise, Abrams’s authorship has become associated with a sense of unfolding mystery, enigmatic tapestries glimpsed one tantalizing thread at a time. One doesn’t watch a series like Lost so much as decipher it; the pleasure comes from a complex interplay of continuity and surprise, the marvelous sense of teetering eternally at the brink of chaos even as new symmetries and patterns become legible.

Abrams’s stories are like magic tricks, full of misdirection and sleight of hand. It drives some people crazy — they see it as nothing more than a shell game, and they ask, with some justification, when we’ll finally get to the truth, the Big Reveal. But as his talk at TED demonstrates, Abrams has always been more about the agile foreshadowing than the final result. It’s a style built paradoxically on the deferral, really the denial, of pleasure — a curious and almost masochistic structure of feeling in our pop culture of instant gratification.

Perhaps that’s where the TED talk’s value really resides. Gabbing about the “mystery box” — a metaphor promiscuously encompassing everything from a good suspense story to bargain-basement digital visual effects to the blank page awaiting an author’s pen — Abrams delivers no substantive content. But he does provide the promise of it: the sense that a breakthrough is just around the corner. It’s an authorial style suited to the rhythms and structure of serial television, which can give closure only through opening up new mysteries. Whether it will work within the bounded length of Cloverfield, that risky mystery box that will open for our inspection next Friday, remains to be seen.