The Soviet satellite Sputnik, launched fifty years ago today, is stitched into my family history in an odd way. A faded Polaroid photograph from that year, 1957, shows my older siblings gathered in the living room in my family’s old house. The brothers and sisters I would come to know as noisily lumbering teenage creatures who alternately vied for my attention and pounded me into the ground are, in the image, blond toddlers messing around with toys. There also happens to be a newspaper in the frame. On its front page is the announcement of Sputnik’s launch.

Whatever the oblique and contingent quality of this captured moment — one time-stopping medium (newsprint) preserved within another (the photograph) — I’ve always been struck by how it layers together so many kinds of lost realities, realities whose nature and content I dwell upon even though, or because, I never knew them personally. Sputnik’s rhythmically beeping trajectory through orbital space echoes another, more idiomatic “outer space,” the house where my family lived in Ann Arbor before I was born (in the early 1960s, my parents moved across town to a new location, the one that I would eventually come to know as home). These spaces are not simply lost to me, but denied to me, because they existed before I was born.

Which is OK. Several billion years fall into that category, and I don’t resent them for predating me, any more than I pity the billions to come that will never have the pleasure of hosting my existence. (I will admit that the only time I’ve really felt the oceanic impact of my own inevitable death was when I realized how many movies [namely all of them] I won’t get to see after I die.) If I’m envious of anything about that family in the picture from fall 1957, it’s that they got to be part of all the conversations and headlines and newspaper commentaries and jokes and TV references and whatnot — the ceaseless susurration of humanity’s collective processing — that accompanied the little beeping Russian ball as it sliced across the sky.

As a fan of the U.S. space program, I didn’t think I really cared that much about Sputnik until I caught a story from NPR on today’s Morning Edition, which profiled the satellite’s designer, Sergei Korolev. One of Korolev’s contemporaries, Boris Chertok, relates how Sputnik’s shape “was meant to capture people’s imagination by symbolizing a celestial body.” It was the first time, to be honest, I’d thought about satellites being designed as opposed to engineered — shaped by forces of fashion and signification rather than the exigencies of physics, chemistry, and ballistics. One of the reasons I’ve always liked probes and satellites such as the Surveyor moon probe, the Viking Martian explorer, the classic Voyager, and my personal favorite, the Lunar Orbiter 1 (pictured here), is that their look seemed entirely dictated by function.

Free of extras like tailfins and raccoon tails, flashing lights and corporate logos, our loyal emissaries to remoteness like the Mariner or Galileo satellites possessed their own oblivious style, made up of solar panels and jutting antennae, battery packs and circuit boxes, the mouths of reaction-control thrusters and the rotating faces of telemetry dishes. Even the vehicles built for human occupancy — Mercury, Gemini, and Apollo capsules — I found beautiful, or in the case of Skylab or the Apollo missions’ Lunar Module, beautifully ugly, with their crinkled reflective gold foil, insectoid angles, and crustacean asymmetries. My reverence for these spacefaring robots wasn’t limited to NASA’s work, either: when the Apollo-Soyuz docking took place in 1975 (I was nine years old then, my 1966 birth equidistant between the Sputnik launch and the docking that bracketed it), it was like two creatures from the deep sea getting it on — literally bumping uglies.

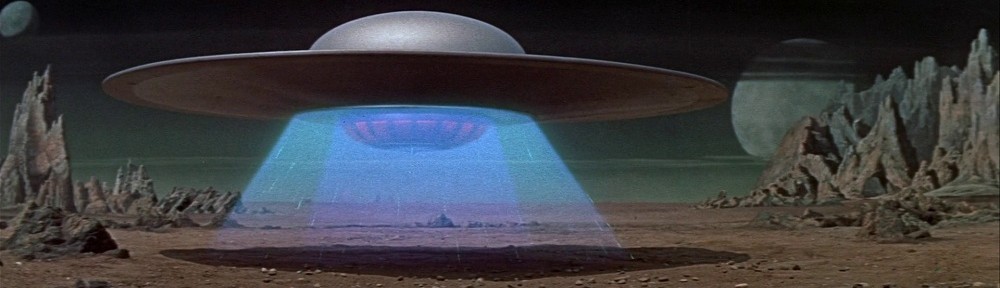

So the notion that Sputnik’s shape was supposed to suggest something, “symbolizing a celestial body,” took me at first by surprise. But I quickly came to embrace the idea. After all, the many fictional space- and starships that have obsessed me from childhood — the Valley Forge in Silent Running, the rebel X-Wings and Millennium Falcon from Star Wars, the mothership from Close Encounters of the Third Kind, the Eagles from Space: 1999, and of course the U.S.S. Enterprise from Star Trek — are, to a one, the product of artistic over technical sensibilities, no matter how the modelmakers might have kit-bashed them into verisimilitude. And if Sputnik’s silver globe and backswept antennae carried something of the ’50s zeitgeist about them, it’s but a minuscule reflection of the satellite’s much larger, indeed global, signification: the galvanizing first move in a game of orbital chess, the pistol shot that started the space race, the announcement — through the unbearably lovely, essentially passive gesture of free fall, a metal ball dropping endlessly toward an earth that swept itself smoothly out of the way — that the skies were now open for warfare, or business, or play, as humankind chooses.

Happy birthday, Sputnik!