This isn’t a review, as I haven’t yet made it to the theater to see Indiana Jones and the Kingdom of the Crystal Skull (portal to the transmedia world of Dr. Jones here; typically focused and informative Wiki entry here). What I have been doing — breaking my normal rule about staying spoiler-free — is poring over fan commentaries on the new movie, swimming within the cometary aura of its street-level paratexts, working my way into the core theatrical experience from the outside in. This wasn’t anything intentional, more the crumbling of an internet wall that sprang one informational leak after another, until finally the wave of words washed over me like, well, one of the death traps in an Indiana Jones movie.

Usually I’m loath to take this approach, finding the twists and turns of, say, Battlestar Galactica and Lost far more compelling when they clobber me unexpectedly (and let me add, both shows have been rocking out hard with their last couple of episodes). But it seemed like the right approach here. Over the years, the whole concept of Indiana Jones has become a diffuse map, gas rather than solid, ocean rather than island. Indy 4 is a media object whose very essence — its cultural significance as well as its literal signification, the decoding of its concatenated signage — depends on impacted, recursive, almost inbred layers of cinematic history.

On one level, the codes and conventions of pulp adventure genres, 1930s serials and their ilk, have been structured into the series film by film, much like the rampant borrowings of the Star Wars texts (also masterminded by George Lucas, whose magpie appropriations of predecessor art are cannily and shamelessly redressed, in his techno-auteur house style, as timelessly mythic resonance). But by now, 27 years after the release of Raiders of the Lost Ark, the Indy series must contend with a second level of history: its own. The logic of pop-culture migration has given way to the logic of the sequel chain, the franchise network, the transmedia system; we assess each new installment by comparing it not to “outside” films and novels but to other extensions of the Indiana Jones trademark. Indy 4, in other words, cannot be read intertextually; it must be read intratextually, within the established terms of its brand. And here the franchise’s history becomes indistinguishable from our own, since it is only through the activity of audiences — our collective memory, our layered conversations, the ongoing do-si-do of celebration, critique, and comparison — that the Indy texts sustain any sense of meaning above and beyond their cold commodity form.

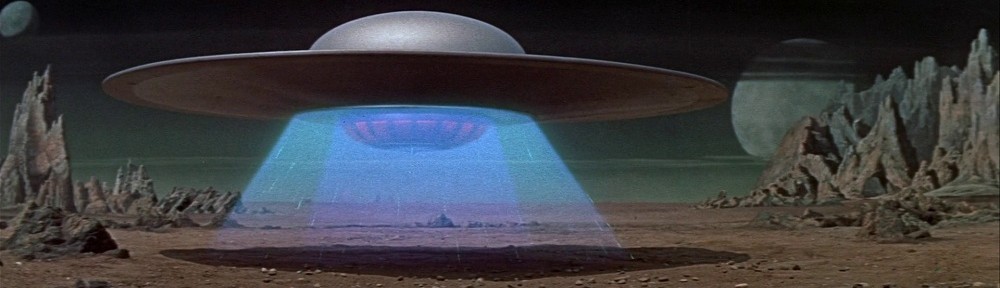

All of this is to say that there’s no way Indiana Jones and the Kingdom of the Crystal Skull could really succeed, facing as it does the impossible task of simultaneously returning to and building upon a shared and cherished moment in film history. While professional critics have received the new film with varying degrees of delight and disappointment, the talkbacks at Ain’t It Cool News (still my go-to site for rude and raucous fan discourse) are far more scornful, even outraged, in their assessment. Their chorused rejection of Indy 4 hits the predictable points: weak plotting, flimsy attempts at comic relief, and, in the movie’s blunt infusion of science-fiction iconography, a generic splicing so misjudged and misplayed that the film seems to be at war with its own identity, a body rejecting a transplanted organ.

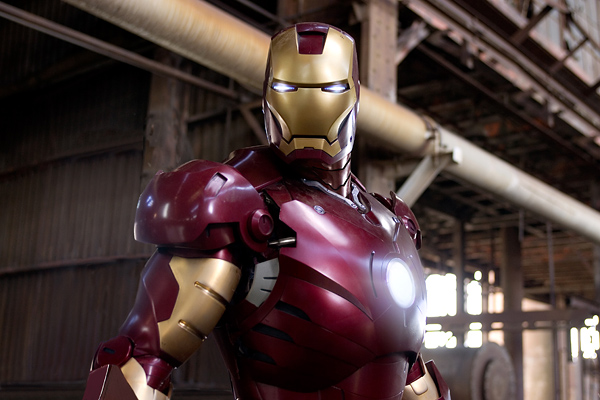

But running throughout the talkback is another, more symptomatic complaint, centering on the new film’s overuse of CG visual effects. The first three movies — Raiders, Temple of Doom, and Last Crusade — covered a span from 1981 to 1989, an era that can now be retroactively characterized as the last hurrah of pre-digital effects work. All three feature lots of practical effects — stuntwork, pyrotechnics, and the on-set “wrangling” of everything from cobras to cockroaches. But more subtly, all make use of postproduction optical effects based on non-digital methods: matte paintings, bluescreen compositing, a touch of cel animation here, a cloud tank there. Both practical and optical effects have since been augmented, if not colonized outright, by CG, a shift apparently unmissable in Indy 4. And that has longtime fans in an uproar, their antidigital invective targeted variously at Lucas’s influence, the loss of verisimilitude, and the growing family resemblance of one medium (film) to another (videogames):

> The Alien shit didnt bother me at all, it was just soulless and empty as someone earlier said.. And the CGI made it not feel like an Indy flick in some parts.. I walked out of the theater thinking the old PC game Fate of Atlantis gave me more Indiana joy than this piece of big budget shit.

> My biggest gripe? Too much FUCKING CGI. The action lacked tension in crucial places. And there were too many parts (more than from the past films) where Looney Tunes physics kept coming into play. By the end, when the characters endure 3 certain deaths, you begin to think “Okay, the filmmakers are just fucking around, lean back in your seat and take in the silliness.” No thanks. That’s not what makes Indiana Jones movies fun.

> This film was AVP, The Mummy Returns and Pirates of the Fucking Carribean put together, a CGI shitfest. A long time ago in a galaxy far far away, Lucas said “A special effect is a tool, a means to telling astory, a special effect without a story is a pretty boring thing.” Take your own advice Lucas, you suck!!!

> The entire movie is shot on a stage. What happened to the locations of the past? The entire movie is CG. What a disappointment. I really, REALLY wanted to enjoy it.

Interestingly, this tension seems to have been anticipated by the filmmakers, who loudly claimed that the new film would feature traditional stuntwork, with CGI used only for subtleties such as wire removal. But the slope toward new technologies of image production proves to be slippery: according to Wikipedia, CG matte paintings dominate the film, and while Steven Spielberg allegedly wanted the digital paintings to include visible brushstrokes — as a kind of retro shout-out to the FX artists of the past — the result was neither nostalgically justifiable nor convincingly indexical.

Of course, I’m basing all this on a flimsy foundation: Wiki entries, the grousing of a vocal subcommunity of fans, and a movie I haven’t even watched yet. I’m sure I will get out to see Indy 4 soon, but this expedition into the jungle of paratexts has diluted my enthusiasm somewhat. I’ll encounter the new movie all too conscious of how “new” and “old” — those basic, seemingly obvious temporal coordinates — exceed our ability to construct and control them, no matter how hard the filmmakers may try, no matter how hard we audiences may hope.