To anyone interested in how old image technologies date, I highly recommend Laura Mulvey’s short essay in the Spring 2007 Film Quarterly (Vol. 60, No. 3, page 3) entitled “A Clumsy Sublime.” (The link is here, but you may need special privileges to access it; I’m writing this post in my office, from whose campus-tied network a vast infospace of journals and databases is transparently visitable.) Mulvey writes about rear-projection cinematography, that trick of placing actors in front of false backgrounds. Think of scenes in films from the 1940s and 1950s in which characters drive a car, their cranking of the steering wheel bearing no relationship to the projected movie-in-a-movie of the road unspooling behind them. Nowadays these shots are for the most part instantly spottable for the in-studio machinations they are: nothing makes an undergraduate audience snicker more quickly than the sudden jump to a stilted closeup of an actor standing before an all-too-grainy slideshow. Mulvey deftly dissects the economic reasons that made rear-projection shots a necessity, but the real jackpot comes in the essay’s concluding paragraph, where she writes:

This paradoxical, impossible space, detached from either an approximation to reality or the verisimilitude of fiction, allows the audience to see the dream space of cinema. But rear projection renders the dream uncertain: the image of a cinematic sublime depends on a mechanism that is fascinating because of, not in spite of, its clumsy visibility.

With newly fine-grained methods of compositing images filmed in different places and at different times (the traveling matte was only the first step on a path to digital cut-and-paste cinema), rear projection is rarely seen anymore. But as Mulvey observes, “As so often happens with passing time, [rear projection’s] disappearance has given this once-despised technology new interest and poignancy.”

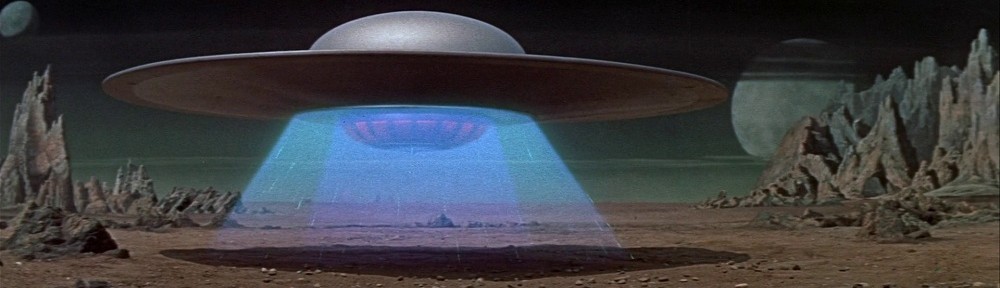

Her point is equally applicable to a range of filmmaking techniques, especially those of special effects and visual effects, which invariably pay for their cutting-edge-ness by going quickly stale. (Ray Harryhausen’s stop-motion animation, for example, “fools” no one now, but is prized, indeed cherished, by aficionados.) But I’d like to extend the category of the clumsy sublime to another medium, the videogame, which has evolved much more rapidly and visibly than cinema: under the speeding metronome of Moore’s Law, gaming’s technological substrate is essentially reinvented every couple of years. Games from 2000 can’t help but announce their relative primitiveness, yet they look cutting-edge next to games of 1995, and so on and so on back to the circular screen of the PDP-1 and the rastery vessels of 1962’s Spacewar. Yet we don’t disdain old games as hopelessly unsophisticated; instead, a lively retrogame movement preserves and celebrates the pleasures of 8-bit displays and games that fit into 4K of RAM.

Two examples of videogames’ clumsy sublimity can be found on these marvelous websites. Rosemarie Fiore is an artist whose work includes beautiful time-lapse photographs of classic videogames of the early 1980s like Tempest, Gyruss, and Qix. Diving beneath the surface of the screen, Ben Fry’s distellamap traces the calls and returns of code, the dancing of data, in Atari 2600 games. What I like about these projects is that they don’t just fetishize the old arcade cabinets, home computers and consoles, cartridges and software: rather, they build on and interpret the pleasures of gaming’s past while staying true to its graphic appeal and crudely elegant architecture — the fast-emerging clumsy sublime of new interactive media.

I get frustrated by the way new computer games have ever-increasing system requirements, yet the added “realism” that drives these increased requirements often adds little to my enjoyment of the game. My frustration comes in part from the fact that I can’t afford to keep buying faster computers just to keep up with the latest games, but even if money were no object, cinematic realism adds nothing to many games. But I guess it does depend on the genre. If I’m playing a strategy game, I don’t want to see a little simulated battle on the board when I make a move. That just slows things down. I’m perfectly content with abstract representations in a strategy game. OTOH, I want a flight simulator to be as realistic as possible. But then that’s the difference between a game and a simulation.

Mike: I agree with you that different games require different levels of — and approaches to — graphics, and that a mismatch between game type and graphic form results in our sense of a flaw somewhere in the system. Excessive graphic sophistication, a 3D immersive environment for Tetris, is kind of like seeing a B-movie concept given A-movie treatment — Laurence Olivier slumming in a role and story that should have gone to Chuck Norris. With the added frustration that we paid big $$$ to play a game that would have played easier, simpler, and cheaper on the screen of our cell phone.

But that’s part of the answer right there: the proliferation of tiny screens and more limited memory capacities that are continually introduced into the gaming ecosystem through new, smaller devices. The irony is that, once released, these devices quickly launch into their own exponential improvement curve, with subsequent waves of miniaturization making it possible to stuff more and more, faster and faster, into the same space. The kind of excessive graphic realism to which you object seems an inevitable trend, a kind of genetic predisposition, in games and the devices that are their homes. One of the places where we buck that trend, I think, is the retrogame movement (emulators and the like), which enables us to revisit a time when games were pleasingly simple and direct (or so they look to our jaded, dazzled eyes).

My question is: Do you see this as being a recent development in gaming’s techno-economics? That is, have system requirements accelerated steeply over the last few years, forcing a kind of arms race toward some hypothetical finish line of “total” realism, or have these processes always been in place? I ask because, having grown up in the 1970s and 1980s, you and I witnessed a series of stages in the graphic evolution of games that must have seemed revolutionary to us at the time — the movement from text-based systems to display-based systems, the introduction of color and sound, 8-bit architecture giving way to 16-bit and later 32-bit and so on. I think, in short, that there’s always been a certain sense of vertigo about “where things are headed,” which creates a paradox: is a perpetual state of change a state of change at all?

I have an article on this subject (looking specifically at the emergence of the “3D engine” alongside the first-person shooter in the early 1990s) coming out in a collection called Videogame/Player/Text, edited by Barry Atkins and Tanya Krzywinska for Manchester University Press. (Here’s a link to the UK Amazon listing, if you’ve got 18 quid burning a hole in your pocket.) In a future post, I’ll talk more about this publication in hopes of continuing the discussion you’ve started — it’s an area of game studies I find particularly compelling, and which, IMO, hasn’t received enough attention.

I’m having difficulty formulating an answer to your question. I think the answer might simply be “yes” because of the curve of the underlying technical advance: what’s that theorem that says that computing speed will increase by such-and-such a factor every so many years (Bell’s?). I.e., yes, these processes have always been in place, but also yes, it has increased more steeply in recent years (following the curve of the underlying increase in technical capacity, which, I think, is an ascending curve, but I’m not well-informed on this), and yes, the arms race is an appropriate metaphor, up to a point. Tracking the underlying technical progress is complicated further when we consider not only computing speed and memory but the introduction of the Internet and possibilities for multiplayer interaction.

A quick aside: text-based systems did not predate display-based systems, did they? I assume you’re referring to things like MUDs, which I thought came after Pong and the old Atari-type games, but before 3D videogames.

This question is hard for me to think about because I’m thinking about it as a gamer, not necessarily a *video*gamer or student of computer media. Thinking about computing in relation to gaming, with the aforementioned genre considerations added in, makes me think of one of those diagrams of connected bubbles, and it gets pretty complicated with all the interconnections.

Oh, and I can’t take credit for starting this thread.

Whenever I see that “Heavenly Sword” commercial, I think of this thread. Then I think, behold the future of porn. (Or present? I am probably behind the times, as usual.)

Mike, your post prompted me to go looking for info on Heavenly Sword — I wasn’t able to find an ad per se, but did locate lots of lovely video. Thanks for putting me onto it.

On the subject of porn, games, and graphics, there is a lot to talk about — enough that it really does need its own new thread. I’ll say this much: the future of interactive porn is definitely the Wii.